In the world of artificial intelligence and deep learning, TransformerDecoderLayer is a crucial component of transformer-based models. It plays a significant role in natural language processing (NLP), helping machines understand and generate human-like text. If you’re new to AI, you might find this term confusing—but don’t worry! This guide will break it down in simple terms so that even a 10-year-old can understand.

What Is TransformerDecoderLayer? (Explained Simply)

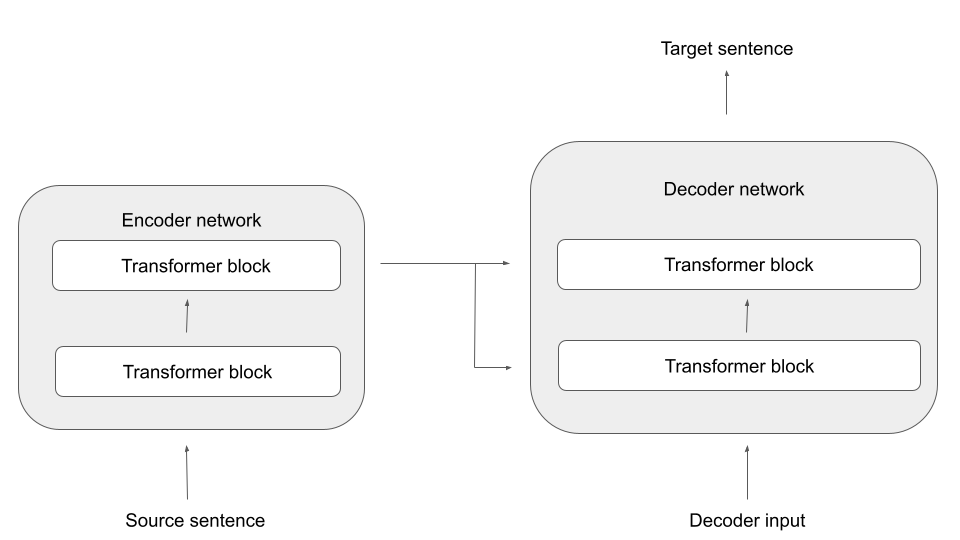

At its core, the TransformerDecoderLayer is a part of transformer models, which are widely used for tasks like language translation, text generation, and chatbots. Think of it like a smart assistant that helps computers make sense of words, sentences, and context.

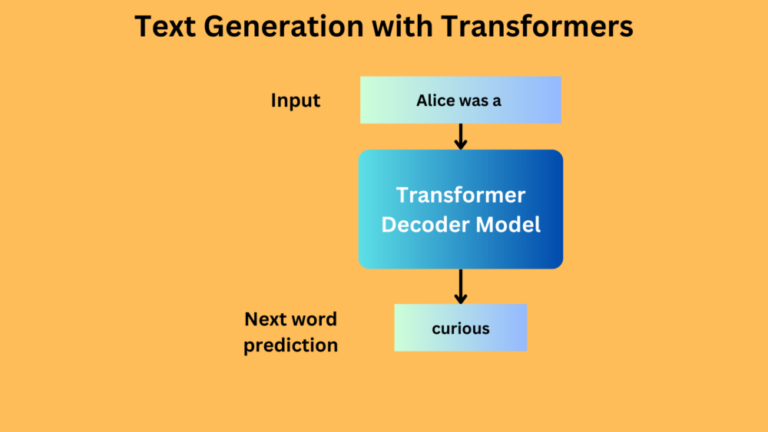

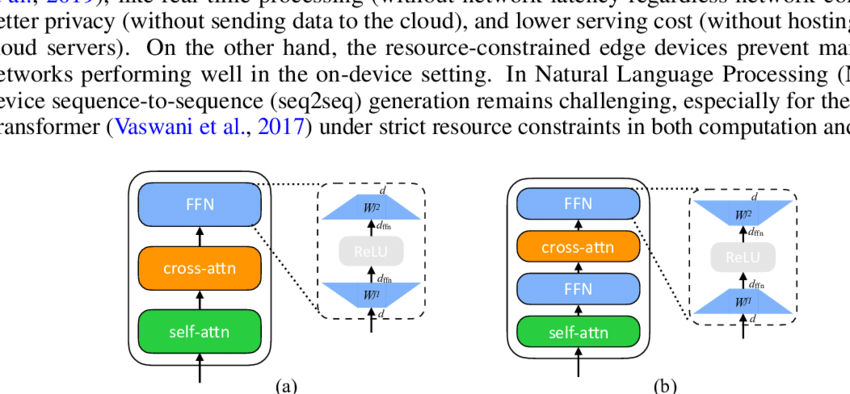

A transformer model consists of an encoder and a decoder. The encoder processes the input (like a sentence in English), and the decoder generates the output (like a translation in Spanish). The TransformerDecoderLayer is a building block inside the decoder. A decoder is made by stacking several of these layers, and each one refines the output written so far while consulting what the encoder produced.
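To make the encoder and decoder hand-off concrete, here is a minimal PyTorch sketch. The sizes (32-dimensional vectors, 4 heads, 6 source tokens, 4 target tokens) are illustrative assumptions, and random tensors stand in for real embedded sentences.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

d_model, nhead = 32, 4  # small illustrative sizes, not taken from any real model

# One encoder layer and one decoder layer; real models stack several of each.
encoder_layer = nn.TransformerEncoderLayer(d_model=d_model, nhead=nhead, batch_first=True)
decoder_layer = nn.TransformerDecoderLayer(d_model=d_model, nhead=nhead, batch_first=True)

# Random stand-ins for already-embedded sentences: (batch, sequence length, d_model).
source = torch.randn(1, 6, d_model)  # e.g. 6 English tokens
target = torch.randn(1, 4, d_model)  # e.g. 4 Spanish tokens produced so far

memory = encoder_layer(source)          # what the encoder hands over
output = decoder_layer(target, memory)  # the decoder reads its own tokens plus the memory

print(memory.shape)  # torch.Size([1, 6, 32])
print(output.shape)  # torch.Size([1, 4, 32])
```

The decoder output has one vector per target token, while the memory keeps one vector per source token, so the two sentences do not need to be the same length.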

Why Is TransformerDecoderLayer Important?

The TransformerDecoderLayer is important because it helps AI models:

- Understand Context: Unlike older models, it doesn’t just focus on individual words—it considers the entire sentence.

- Generate Accurate Responses: It ensures AI-generated text is relevant and makes sense.

- Work Efficiently: During training, it handles all the words of a sentence in parallel instead of one after another, which is typically faster than older recurrent models.

- Improve Language Models: Tools like ChatGPT, Google Translate, and AI-powered chatbots are built on transformer technology of this kind.

Without TransformerDecoderLayer, modern AI models wouldn’t be as efficient, accurate, or scalable.

How Does TransformerDecoderLayer Work?

The TransformerDecoderLayer works by processing information in multiple steps. Instead of reading words one by one, like older AI models, it can look at all the words it has been given at once and find relationships between them. (When generating text, it still produces one new word at a time.) Three ideas make this possible:

Understanding Attention Mechanism

One of the most important parts of the TransformerDecoderLayer is the attention mechanism. Think of this as the brain of the model, helping it figure out which words are important in a sentence.

For example, if you say, “The cat sat on the mat, and it looked sleepy,” the model needs to understand that “it” refers to “the cat.” The attention mechanism helps the model focus on the right words and avoid confusion.
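The idea can be sketched in a few lines of PyTorch. This is a simplified illustration of scaled dot-product attention, not the full layer: a real layer first passes the words through learned projections, whereas this sketch feeds random stand-in vectors in directly.

```python
import math
import torch

def scaled_dot_product_attention(query, key, value):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = query.size(-1)
    scores = query @ key.transpose(-2, -1) / math.sqrt(d_k)  # similarity of every token pair
    weights = torch.softmax(scores, dim=-1)                  # each row sums to 1
    return weights @ value, weights

torch.manual_seed(0)
tokens = torch.randn(5, 16)  # 5 tokens as 16-dimensional random stand-in vectors
output, weights = scaled_dot_product_attention(tokens, tokens, tokens)

print(weights.shape)        # torch.Size([5, 5]): one weight per (token, token) pair
print(weights.sum(dim=-1))  # every row sums to 1, up to rounding
```

Each row of `weights` says how much one token "looks at" every other token. In a trained model, the row for "it" would ideally put most of its weight on "cat".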

Role of Multi-Head Attention

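Multi-head attention takes this concept further. Instead of running attention just once, the model runs several attention operations, called heads, side by side. Each head can focus on a different kind of relationship between words, and their results are combined at the end.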

Imagine you’re reading a book—your brain doesn’t just process one word at a time. You remember past sentences, predict what’s coming next, and understand the emotions in the text. Multi-head attention works the same way, making AI more intelligent and responsive.
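As a rough illustration, PyTorch's `nn.MultiheadAttention` exposes the separate heads. The sizes below are assumptions chosen to keep the output small, the input is random stand-in data, and the `average_attn_weights` argument assumes a reasonably recent PyTorch version.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

embed_dim, num_heads = 16, 4  # illustrative sizes; embed_dim must be divisible by num_heads
attention = nn.MultiheadAttention(embed_dim, num_heads, batch_first=True)

x = torch.randn(1, 5, embed_dim)  # (batch, tokens, embed_dim), random stand-in data

# average_attn_weights=False keeps one attention map per head instead of their mean.
output, weights = attention(x, x, x, average_attn_weights=False)

print(output.shape)   # torch.Size([1, 5, 16])
print(weights.shape)  # torch.Size([1, 4, 5, 5]): 4 heads, each with a 5x5 attention map
```

Here each of the 4 heads works on its own 4-dimensional slice of the 16-dimensional vectors, and the results are merged back into a single 16-dimensional output per token.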

How Does Positional Encoding Help?

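Unlike humans, AI models don’t naturally understand word order. This is where positional encoding comes in. It adds a position-dependent pattern of numbers to each word’s vector, helping the model understand the sequence of words. Strictly speaking, this happens just before the words reach the first decoder layer rather than inside the layer itself.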

For example, “The dog chased the cat” and “The cat chased the dog” contain exactly the same words but have different meanings. Positional encoding ensures the model understands the order correctly.
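One common scheme is the sine and cosine encoding from the original Transformer design. The sketch below is one way to write it under that assumption. The function name and sizes are made up for illustration, and some models learn their position vectors instead.

```python
import math
import torch

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len, d_model) tensor of sine/cosine position codes; d_model must be even."""
    position = torch.arange(seq_len, dtype=torch.float32).unsqueeze(1)
    div_term = torch.exp(
        torch.arange(0, d_model, 2, dtype=torch.float32) * (-math.log(10000.0) / d_model)
    )
    encoding = torch.zeros(seq_len, d_model)
    encoding[:, 0::2] = torch.sin(position * div_term)  # even dimensions
    encoding[:, 1::2] = torch.cos(position * div_term)  # odd dimensions
    return encoding

torch.manual_seed(0)
embeddings = torch.randn(4, 8)  # 4 tokens, random stand-in embeddings
pe = sinusoidal_positional_encoding(4, 8)
decoder_input = embeddings + pe  # this sum is what the first decoder layer would receive

print(pe.shape)                   # torch.Size([4, 8])
print(torch.equal(pe[0], pe[1]))  # False: each position gets a different code
```

Because the same word receives a different code at each position, two sentences with identical words in a different order end up as different inputs.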

Key Features of TransformerDecoderLayer

The TransformerDecoderLayer has several features that make it powerful:

- Self-Attention Mechanism: Helps the model focus on the right words.

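- Multi-Head Attention: Runs several attention operations side by side, both over the text generated so far and over the encoder’s output, so the model can track different relationships at once.

- Layer Normalization: Keeps the numbers flowing through the layer in a stable range, which makes training more reliable.

- Feed-Forward Networks: Transform each word’s representation further after attention has mixed in information from other words.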

- Dropout Regularization: Prevents overfitting and improves generalization.

These features make the TransformerDecoderLayer one of the most advanced tools in AI today.
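To show how these pieces fit together, here is a simplified decoder layer written by hand. `TinyDecoderLayer` is a hypothetical teaching class with assumed sizes, not PyTorch's actual implementation, which offers more options.

```python
import torch
import torch.nn as nn

class TinyDecoderLayer(nn.Module):
    """Simplified decoder layer (hypothetical teaching class, normalization after each step)."""

    def __init__(self, d_model=32, nhead=4, dim_feedforward=64, dropout=0.1):
        super().__init__()
        self.self_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout, batch_first=True)
        self.cross_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout, batch_first=True)
        self.feed_forward = nn.Sequential(
            nn.Linear(d_model, dim_feedforward),
            nn.ReLU(),
            nn.Dropout(dropout),
            nn.Linear(dim_feedforward, d_model),
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.norm3 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, tgt, memory, tgt_mask=None):
        # 1. Masked multi-head self-attention over the target tokens.
        attended, _ = self.self_attn(tgt, tgt, tgt, attn_mask=tgt_mask)
        x = self.norm1(tgt + self.dropout(attended))
        # 2. Multi-head attention over the encoder output (cross-attention).
        attended, _ = self.cross_attn(x, memory, memory)
        x = self.norm2(x + self.dropout(attended))
        # 3. Feed-forward network applied to each position separately.
        return self.norm3(x + self.dropout(self.feed_forward(x)))

torch.manual_seed(0)
layer = TinyDecoderLayer()
tgt = torch.randn(2, 5, 32)     # random stand-in for embedded target tokens
memory = torch.randn(2, 7, 32)  # random stand-in for encoder output
causal_mask = torch.triu(torch.ones(5, 5, dtype=torch.bool), diagonal=1)  # True = blocked
out = layer(tgt, memory, tgt_mask=causal_mask)
print(out.shape)  # torch.Size([2, 5, 32])
```

Each of the three steps adds its result back onto its input before normalizing, which is why the output keeps the same shape as the target input.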

Where Is TransformerDecoderLayer Used?

Transformer decoder layers like this one are used in various AI applications, including:

- Chatbots like ChatGPT, Siri, and Alexa

- Language translation tools like Google Translate

- Text summarization models used by news websites

- Speech-to-text applications like voice assistants

- AI-powered writing assistants like Grammarly

Many modern NLP (Natural Language Processing) models, especially those that generate text, are built from decoder layers like this one. Models that only need to read text, such as BERT, use encoder layers instead.

TransformerDecoderLayer vs Other AI Models

How does TransformerDecoderLayer compare to older AI models? Let’s break it down.

Why Is TransformerDecoderLayer Better?

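- More Accurate: Older models, like RNNs (Recurrent Neural Networks), pass information along one word at a time, so details from early words can fade. TransformerDecoderLayer lets every word attend directly to all the words before it, which usually leads to better understanding.

- Faster Training: Since it doesn’t rely on sequential word processing, it can handle all positions of a training sentence in parallel. When generating text, it still produces one word at a time.

- Handles Long Sentences: Older models struggle with long texts. TransformerDecoderLayer copes much better with complex sentences and paragraphs, although its cost rises quickly as the text gets longer.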
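The parallel processing described above can be checked with a small experiment. This sketch, using assumed sizes and random stand-in data, runs a whole sequence through the layer in one call with a causal mask, then changes only the last token to confirm that earlier positions never looked ahead.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

layer = nn.TransformerDecoderLayer(d_model=32, nhead=4, batch_first=True)
layer.eval()  # turn off dropout so the two runs are comparable

seq_len = 6
# True above the diagonal means "position i may not look at positions after i".
causal_mask = torch.triu(torch.ones(seq_len, seq_len, dtype=torch.bool), diagonal=1)

memory = torch.randn(1, 5, 32)     # random stand-in for encoder output
tgt = torch.randn(1, seq_len, 32)  # random stand-in for embedded target tokens

with torch.no_grad():
    out = layer(tgt, memory, tgt_mask=causal_mask)  # all 6 positions in a single call

    # Change only the last token and run again.
    tgt_changed = tgt.clone()
    tgt_changed[:, -1] = torch.randn(32)
    out_changed = layer(tgt_changed, memory, tgt_mask=causal_mask)

# Earlier positions are unaffected by the later token.
print(torch.allclose(out[:, :-1], out_changed[:, :-1], atol=1e-5))  # True
```

An RNN would need six sequential steps to get the same six outputs. Here they come out of one call, and the mask is what keeps each position from peeking at later words.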

Does It Have Any Limitations?

While TransformerDecoderLayer is powerful, it has some limitations:

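- Computationally Expensive: Attention compares every word with every other word, so the work grows roughly with the square of the text length. This requires high processing power, making it costly.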

- Needs Large Datasets: Works best when trained on huge amounts of text.

- Hard to Interpret: Unlike simpler models, it’s difficult to understand why it makes certain decisions.

Despite these challenges, it remains one of the most widely used choices for NLP tasks.
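To put the cost in concrete terms, the following sketch counts the learnable parameters in a single layer and shows how the number of attention scores grows with text length. The sequence lengths are arbitrary examples.

```python
import torch.nn as nn

# d_model=512 and nhead=8, with PyTorch's defaults for everything else.
layer = nn.TransformerDecoderLayer(d_model=512, nhead=8)

# Learnable parameters in one layer; full models stack many such layers.
num_params = sum(p.numel() for p in layer.parameters())
print(f"parameters in one layer: {num_params:,}")

# Self-attention scores every token against every other token,
# so the number of scores per head grows with the square of the length.
score_counts = {seq_len: seq_len * seq_len for seq_len in (128, 1024, 8192)}
for seq_len, count in score_counts.items():
    print(f"{seq_len:>5} tokens -> {count:>12,} scores per head")
```

Making the text 8 times longer multiplies the number of scores by 64, which is why long inputs are expensive.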

How to Implement TransformerDecoderLayer?

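If you’re a developer, you can build transformer decoder layers with the libraries below. The class named TransformerDecoderLayer itself comes from PyTorch (torch.nn.TransformerDecoderLayer), while the other libraries offer comparable building blocks under their own names: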

- TensorFlow

- PyTorch

- Hugging Face Transformers

Here’s a basic Python code snippet using PyTorch:

```python
import torch
import torch.nn as nn
from torch.nn import TransformerDecoderLayer

# Define the decoder layer
decoder_layer = TransformerDecoderLayer(d_model=512, nhead=8)

print(decoder_layer)
```

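This initializes a TransformerDecoderLayer in which every word is represented by a 512-dimensional vector (d_model=512) and attention is split across 8 heads (nhead=8). The other settings keep PyTorch’s defaults, such as a 2048-unit feed-forward network and a dropout rate of 0.1. You can then integrate it into a larger AI model.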
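As one possible next step, the layer can be stacked into a full decoder with `nn.TransformerDecoder` and run on some data. The batch size, sequence lengths, and the choice of 6 layers are illustrative assumptions, and random tensors stand in for real embeddings and encoder output.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

decoder_layer = nn.TransformerDecoderLayer(d_model=512, nhead=8, batch_first=True)
decoder = nn.TransformerDecoder(decoder_layer, num_layers=6)  # stacks 6 copies of the layer

batch, src_len, tgt_len = 2, 10, 7         # illustrative sizes
memory = torch.randn(batch, src_len, 512)  # random stand-in for encoder output
tgt = torch.randn(batch, tgt_len, 512)     # random stand-in for embedded target tokens

# Causal mask: True above the diagonal blocks attention to later positions.
tgt_mask = torch.triu(torch.ones(tgt_len, tgt_len, dtype=torch.bool), diagonal=1)

output = decoder(tgt, memory, tgt_mask=tgt_mask)
print(output.shape)  # torch.Size([2, 7, 512])
```

In a complete model, the target tensor would come from an embedding layer plus positional encoding, and the output would pass through a final linear layer that scores each word in the vocabulary.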

Thoughts on TransformerDecoderLayer

The TransformerDecoderLayer is a game-changer in AI. It allows machines to understand, process, and generate human-like text with high accuracy. From chatbots to translation apps, it powers some of the most advanced AI systems in the world.

The Bottom Line

The TransformerDecoderLayer is an essential part of modern NLP models. It helps AI understand context, process language faster, and generate human-like responses. While it has some limitations, its benefits far outweigh the challenges. Whether you’re a beginner or an AI enthusiast, understanding this concept can help you appreciate the power of AI and how it shapes the future.